Demystifying Artificial Intelligence: Simplifying AI and Machine Learning


“Artificial intelligence” (AI) has recently become something of a pop-culture catchphrase, thanks largely to pronouncements from two camps. On one side are optimistic futurists such as Ray Kurzweil and Patrick Winston,1 who believe machines will be “smarter” than humans by the early 2040s, when humankind will have reached the tipping point known as the “singularity.” On the other are pessimistic doomsayers such as Elon Musk, who suggests that we are “summoning the demon” with AI and that it poses “a fundamental risk to the existence of human civilization.”

Artificial intelligence has also been invoked in silly, misinformed predictions by computer science experts such as Geoffrey Hinton2 and by the Obamacare architect and healthcare economist Ezekiel Emanuel. They have suggested that radiologists may be replaced by computers within the next five to 10 years and that AI could “end radiology as a thriving specialty.”3 Not surprisingly, this has led to uncertainty, fear, and confusion in the diagnostic radiology community about artificial intelligence, machine learning, deep learning, and convolutional neural networks.

AI terminology

The phrase “artificial intelligence,” defined as the capacity of machines to exhibit intelligent behavior, was coined by John McCarthy in 1956. AI has been divided into two types. In weak (narrow) AI, computers perform a very narrow set of tasks, such as driving a car, playing chess, or translating a language. In strong (general) AI, a computer can reason, plan, think abstractly, and comprehend complex ideas nearly as well as a human. Whether we will achieve general AI within the 21st century, if ever, is a topic of considerable debate.

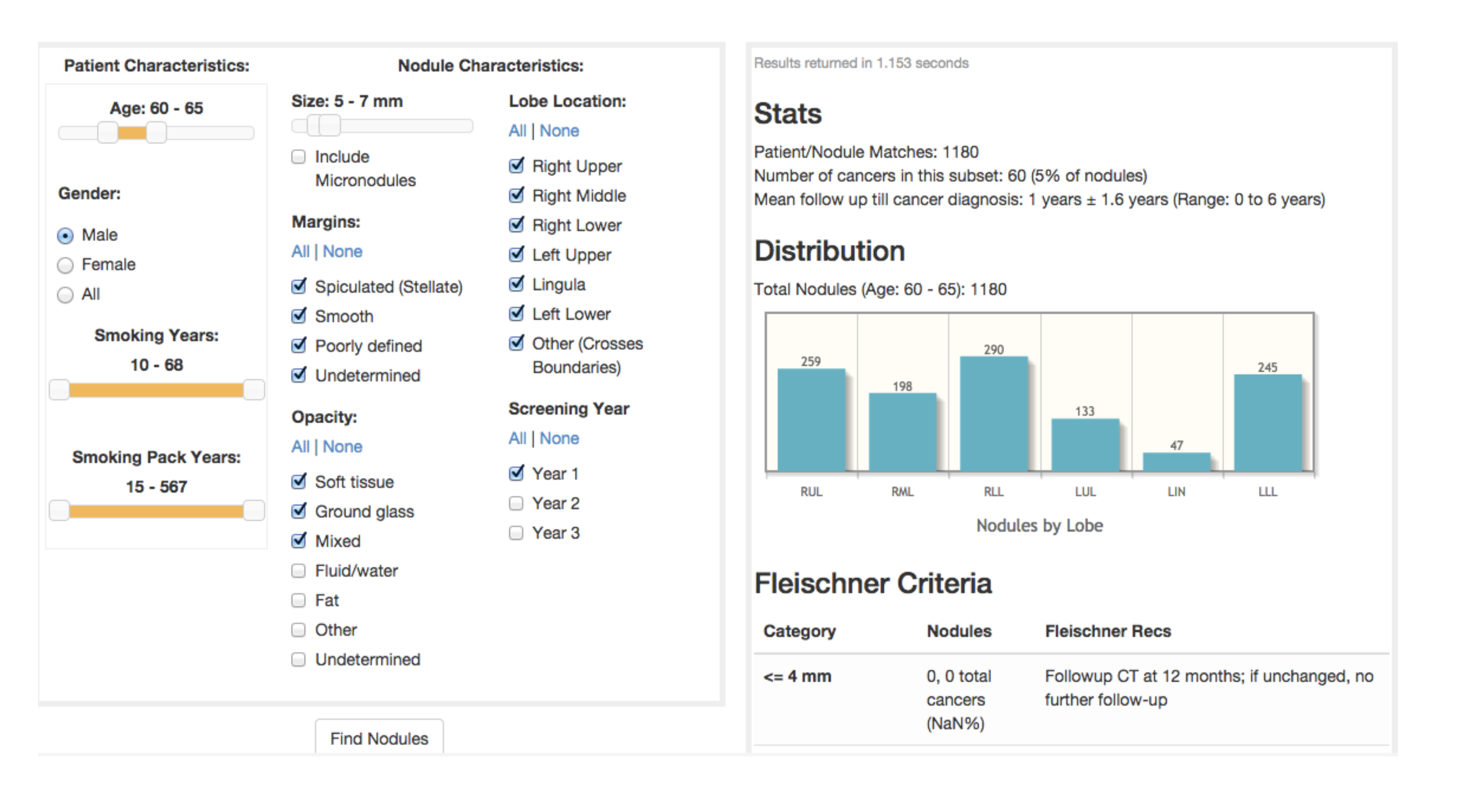

Dozens of advanced statistical techniques that fall under “machine learning” have been around for decades. Machine learning creates a simplified model from a set of data, and this model changes (“learns”) as new data are added. Figure 1 presents a decision support tool that uses data from the National Lung Screening Trial (NLST) about subjects and their lung nodules. These variables could alternatively serve as machine learning inputs, with the output being “benign” or “malignant.” Intuitively, some variables, such as nodule size, have greater predictive value for malignancy than others, such as patient age.

Many authors have used data such as that from the NLST to create a regression formula that predicts the likelihood of cancer by estimating a relative weight for each of these variables. This is a very simple example of machine learning. The machine (logistic regression) “learns” and becomes a better predictor of malignancy as the number of subjects/nodules increases. A different regression formula might apply to different populations. One example is subjects in the Southwest, where granulomatous disease is prevalent. Another is veterans, who are more likely to have been exposed to occupational carcinogens or to have higher rates of alcohol and tobacco use.
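The Python sketch below makes the idea of "learning a relative weight for each variable" concrete. It fits a logistic regression by gradient descent. It is not the formula used by any published NLST model. The ten nodules (size in mm, patient age in years) are invented for illustration, and the helper names `standardize`, `fit_logistic`, and `predict` are hypothetical.

```python
import math

# Invented cases (NOT NLST data): ((nodule size mm, patient age years), label),
# label 1 = malignant, 0 = benign. Both classes share the same set of ages.
DATA = [
    ((4.0, 60.0), 0), ((5.0, 70.0), 0), ((6.0, 65.0), 0),
    ((7.0, 55.0), 0), ((11.0, 62.0), 0),
    ((9.0, 60.0), 1), ((12.0, 70.0), 1), ((15.0, 65.0), 1),
    ((18.0, 55.0), 1), ((22.0, 62.0), 1),
]

def sigmoid(z):
    """Logistic function: maps any real score to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def standardize(rows):
    """Rescale each variable to mean 0, standard deviation 1."""
    cols = list(zip(*rows))
    means = [sum(c) / len(c) for c in cols]
    stds = [math.sqrt(sum((v - m) ** 2 for v in c) / len(c))
            for c, m in zip(cols, means)]
    scaled = [[(v - m) / s for v, m, s in zip(r, means, stds)] for r in rows]
    return scaled, means, stds

def fit_logistic(xs, ys, lr=0.5, epochs=2000):
    """Batch gradient descent on the log-loss; returns one weight per variable and a bias."""
    n = len(xs)
    w = [0.0] * len(xs[0])
    b = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * len(w)
        grad_b = 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y
            grad_w = [g + err * xi for g, xi in zip(grad_w, x)]
            grad_b += err
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

def predict(w, b, means, stds, raw):
    """Probability of malignancy for a new (size, age) pair."""
    x = [(v - m) / s for v, m, s in zip(raw, means, stds)]
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

xs, means, stds = standardize([x for x, _ in DATA])
w, b = fit_logistic(xs, [y for _, y in DATA])
print("weights (size, age):", w)
print("P(malignant | 25 mm, 65 y):", predict(w, b, means, stds, (25.0, 65.0)))
```

With these made-up cases, the fitted weight for size comes out much larger in magnitude than the weight for age. This echoes the intuition that size is the stronger predictor. Refitting on another population's data would yield different weights.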

Beyond logistic regression, dozens of types of machine learning exist that can improve prediction performance. They include support vector machines, k-nearest neighbors, hidden Markov models, and Bayesian belief networks. The type most relevant to recent advances in computer vision and radiology is the “neural network,” so called because of its superficial resemblance to the interconnected neurons of the brain. The term can be misleading. It has fostered the popular but erroneous assumption that neural networks “learn” the way humans do and that, given enough memory and complexity, they will achieve human-level “intelligence.”
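k-nearest neighbors works differently from logistic regression because it builds no formula at all. It classifies a new nodule by a majority vote among the most similar previously labeled cases. The sketch below uses invented cases. The feature scales (`SIZE_SCALE`, `AGE_SCALE`) are hypothetical, hand-picked so that neither variable dominates the distance.

```python
import math
from collections import Counter

# Invented, labeled cases (NOT NLST data): ((nodule size mm, age years), label).
CASES = [
    ((4.0, 60.0), "benign"), ((5.0, 70.0), "benign"), ((6.0, 65.0), "benign"),
    ((7.0, 55.0), "benign"), ((11.0, 62.0), "benign"),
    ((9.0, 60.0), "malignant"), ((12.0, 70.0), "malignant"),
    ((15.0, 65.0), "malignant"), ((18.0, 55.0), "malignant"),
    ((22.0, 62.0), "malignant"),
]

# Hypothetical scales: a 5 mm size difference "counts" about as much as a
# 10-year age difference when measuring similarity.
SIZE_SCALE, AGE_SCALE = 5.0, 10.0

def scaled(features):
    size, age = features
    return (size / SIZE_SCALE, age / AGE_SCALE)

def knn_predict(cases, query, k=3):
    """Label a new nodule by majority vote among its k most similar cases."""
    q = scaled(query)
    nearest = sorted(cases, key=lambda case: math.dist(scaled(case[0]), q))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

print(knn_predict(CASES, (20.0, 63.0)))  # → malignant
print(knn_predict(CASES, (5.0, 63.0)))   # → benign
```

Here "learning" is just storing labeled cases, so adding a new case changes future predictions immediately.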

Human neuroanatomy, however, is far more complex in both form and function, perhaps more analogous to a quantum computer. A better way to think of a neural network might be as a large spreadsheet:

- Input variables such as nodule size, nodule smoothness, and smoking history sit on the left.
- Many columns sit in the middle.
- A simple binary output, such as benign or malignant, sits on the right.

Each cell in a middle column is connected to every cell in the column to its left. Each connection has a weight (multiplier), and each cell adds a bias (an amount added to the weighted sum). The result then passes through an activation function (a non-linear function that can turn the cell on or off based on a threshold). Over many training iterations, the weights and biases are progressively fine-tuned until the input variables in a training set most often produce the correct output. The activation functions are usually fixed choices rather than learned values. In the NLST example, each training subject/nodule would adjust these parameters. Once “trained,” the model (the “spreadsheet”) can be saved and used to make predictions for new lung nodules.
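The spreadsheet analogy maps directly onto code. This minimal Python sketch builds one hidden "column" of four cells between three input variables and one output cell. It then trains the weights and biases with stochastic gradient descent (backpropagation). The training cases and all names are hypothetical. The activation functions (tanh for hidden cells, the logistic sigmoid for the output) are fixed here; only weights and biases change.

```python
import math
import random

# Invented training cases (NOT real NLST data). Inputs are
# (nodule size cm, margin irregularity 0-1, smoker 0/1); label 1 = malignant.
TRAIN = [
    ((0.4, 0.1, 0.0), 0), ((0.5, 0.2, 1.0), 0), ((0.6, 0.0, 0.0), 0),
    ((0.8, 0.3, 0.0), 0), ((0.7, 0.1, 1.0), 0),
    ((1.5, 0.8, 1.0), 1), ((2.0, 0.6, 1.0), 1), ((1.2, 0.9, 0.0), 1),
    ((2.5, 0.7, 1.0), 1), ((1.8, 0.5, 0.0), 1),
]

N_IN, N_HIDDEN = 3, 4
rng = random.Random(0)  # fixed seed so runs are reproducible

# Hidden column: each cell has one weight per input cell plus a bias.
W1 = [[rng.uniform(-0.5, 0.5) for _ in range(N_IN)] for _ in range(N_HIDDEN)]
b1 = [0.0] * N_HIDDEN
# Output cell: one weight per hidden cell plus a bias.
W2 = [rng.uniform(-0.5, 0.5) for _ in range(N_HIDDEN)]
b2 = 0.0

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def forward(x):
    """Fill the 'spreadsheet' left to right; return hidden values and P(malignant)."""
    hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(W1, b1)]
    p = sigmoid(sum(w * h for w, h in zip(W2, hidden)) + b2)
    return hidden, p

def mean_loss(data):
    """Average binary cross-entropy; lower means a better fit to the labels."""
    total = 0.0
    for x, y in data:
        _, p = forward(x)
        total -= math.log(p) if y == 1 else math.log(1.0 - p)
    return total / len(data)

def train(data, lr=0.1, epochs=2000):
    """Stochastic gradient descent: adjust every weight and bias after each case."""
    global b2
    for _ in range(epochs):
        for x, y in data:
            hidden, p = forward(x)
            d_out = p - y  # loss gradient at the output cell's weighted sum
            for j in range(N_HIDDEN):
                # Back-propagate through W2[j] (before updating it) and tanh.
                d_hidden = d_out * W2[j] * (1.0 - hidden[j] ** 2)
                W2[j] -= lr * d_out * hidden[j]
                for i in range(N_IN):
                    W1[j][i] -= lr * d_hidden * x[i]
                b1[j] -= lr * d_hidden
            b2 -= lr * d_out

loss_before = mean_loss(TRAIN)
train(TRAIN)
loss_after = mean_loss(TRAIN)
predictions = [forward(x)[1] for x, _ in TRAIN]
print(f"loss {loss_before:.3f} -> {loss_after:.3f}")
```

Once `train` returns, the lists `W1`, `b1`, `W2` and the value `b2` are the trained "spreadsheet." Saving them is all it would take to reuse the model on new nodules.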

In medical imaging, each of these input variables can represent a pixel in an image. For example, a 240-by-240-pixel grayscale image of a dog or cat can be represented by 240 × 240, or 57,600, variables. A single image therefore contains a very large number of variables. Relationships among nearby pixels (eg, those forming a whisker) also matter more than relationships among distant ones. For both reasons, a variant known as convolutional neural networks (CNNs) is typically used for image analysis.
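The following Python sketch shows both the variable count and the core operation of a CNN layer. The 5-by-5 image is invented. The 3-by-3 kernel is hand-picked to respond to a thin vertical line, a stand-in for a whisker; a real CNN learns its kernel values during training. Like most deep learning libraries, it computes cross-correlation, which the field conventionally calls convolution.

```python
# A 240-by-240 grayscale image has one input variable per pixel.
PIXELS = 240 * 240

def conv2d(image, kernel):
    """'Valid' 2D cross-correlation (no padding), the operation CNN layers call convolution."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(kernel[a][b] * image[r + a][c + b]
                for a in range(kh) for b in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

# Invented 5x5 image: a thin bright vertical line (think: a whisker) in column 2.
IMAGE = [[0, 0, 1, 0, 0] for _ in range(5)]

# Hand-picked kernel that responds to thin vertical lines; a CNN would
# learn values like these during training instead.
KERNEL = [[-1, 2, -1] for _ in range(3)]

feature_map = conv2d(IMAGE, KERNEL)
print(PIXELS)       # 57600
for row in feature_map:
    print(row)      # [-3, 6, -3] on every row
```

Each output value depends only on a small neighborhood, and the same nine kernel weights are reused at every position. By contrast, a single fully connected cell looking at a 240-by-240 image would need 57,600 weights of its own.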

Deep learning

Convolutional and other neural networks with many layers, known as “deep” neural networks, have been applied to speech recognition, computer vision, and audio translation. This approach has come to be called “deep learning.” What the lay press and the medical imaging community generically call AI usually refers to deep learning, in which neural networks generate algorithms that make predictions. By contrast, traditional “CADx” (computer-aided diagnosis) and “CADe” (computer-aided detection) systems typically require a high level of specialty expertise. They also involve many development steps and take months or years to develop and fine-tune.

What has changed is the ability to generate algorithms directly from large imaging databases, with far fewer steps. By lowering the barriers to algorithm development, deep learning will democratize the development of imaging software and greatly increase the number of algorithms created. This will make it easier for established imaging software developers and innovative start-up companies alike to create useful, potentially game-changing applications that improve efficiency, patient safety, and communication. Deep learning has also been used to improve image quality for PET/CT, MRI, CT, and other modalities. One recent article, for example, described the potential for a 200-fold reduction in FDG dose for PET/CT scanning.4

Major advances in imaging on the horizon

Deep learning, the latest and most promising incarnation of AI, will create major advances in diagnostic imaging in the next five to 10 years. This will not happen because deep learning algorithms are necessarily better than traditional CAD approaches. It will happen because they can be developed much faster and by a larger number of innovative developers. Because data can be turned directly into a deep learning “model” for prediction, some have proclaimed that “data is the new source code.” These new “AI” algorithms will foster a “best of breed” paradigm. Radiologists and others will seek out specific algorithms for specific tasks rather than choosing a PACS or workstation and using only the software available for that system.

Indeed, in a manner analogous to the music industry, imaging departments will not want to buy the whole “record” or “CD” to hear a single song. Instead, they’ll want to download just that selection. This will have a major disruptive influence on PACS and subspecialty workstations and will provide tremendous, innovative sources of new and useful applications.

As it turns out, figuring out what’s wrong with an image is a much more difficult task than simply figuring out what’s in an image in the first place. Several factors will keep radiologists employed for many years to come. They include the need for vast amounts of annotated, up-to-date data, along with the certainty of new regulatory hurdles and medicolegal and technical challenges.

References

- Creighton J. The “Father of Artificial Intelligence” says singularity is 30 years away. Futurism. https://futurism.com/father-artificial-intelligence-sigularity-decades-away/. Accessed May 1, 2018.

- Mukherjee S. A.I. versus M.D. The New Yorker. April 3, 2017.

- Chockley K, Emanuel E. The end of radiology? Three threats to the future practice of radiology. J Am Coll Radiol. 2016;13(12):1415-1420.

- Xu J. 200x Low-dose PET reconstruction using deep learning. arXiv:1712.04119v1.


Citation

E S. Demystifying Artificial Intelligence: Simplifying AI and Machine Learning. Appl Radiol. 2018;(5):26-28.

May 8, 2018