The integration of artificially intelligent technologies with breast imaging


Radiomics and deep learning (DL) tools are making inroads into breast imaging. Several DL-infused breast screening and imaging technologies have recently received FDA 510(k) clearance, with more in the pipeline, while studies are demonstrating the value and potential superiority of deep learning compared to conventional techniques and models.

For example, a study in Radiology recently reported a new deep learning tool, developed by researchers at Massachusetts Institute of Technology (MIT) and Massachusetts General Hospital, that predicts a woman's future risk of breast cancer more accurately than the Tyrer-Cuzick model, a widely available and well-studied risk model.1 The DL tool performed equally well across women of different ages, races, and family histories.

In a statement released by RSNA, the study’s lead author Adam Yala, a PhD candidate at MIT, says, “There’s a very large amount of information in a full-resolution mammogram that breast cancer risk models have not been able to use until recently. Using deep learning, we can learn to leverage that information directly from the data and create models that are significantly more accurate across diverse populations.”
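Risk models such as the two compared in this study are typically judged on discrimination: how often a woman who goes on to develop cancer receives a higher risk score than a woman who does not. The sketch below computes that quantity, the area under the ROC curve (AUC), by direct pairwise comparison. It is a generic illustration, not the study's analysis code, and the scores are made-up toy values.

```python
def auc(scores, outcomes):
    """Area under the ROC curve via pairwise comparison (Mann-Whitney form).

    scores: risk scores from a model; outcomes: 1 if cancer developed, else 0.
    A tie between a case and a control counts as half a win.
    """
    cases = [s for s, y in zip(scores, outcomes) if y == 1]
    controls = [s for s, y in zip(scores, outcomes) if y == 0]
    if not cases or not controls:
        raise ValueError("need at least one case and one control")
    wins = 0.0
    for c in cases:
        for k in controls:
            if c > k:
                wins += 1.0
            elif c == k:
                wins += 0.5
    return wins / (len(cases) * len(controls))


# Toy data: two women later diagnosed (1) and three who were not (0)
outcomes = [1, 1, 0, 0, 0]
model_a = [0.9, 0.4, 0.5, 0.2, 0.1]  # hypothetical scores from one model
model_b = [0.6, 0.3, 0.7, 0.5, 0.2]  # hypothetical scores from another model

print(f"model A AUC: {auc(model_a, outcomes):.3f}")  # 0.833
print(f"model B AUC: {auc(model_b, outcomes):.3f}")  # 0.500
```

An AUC of 0.5 means a model ranks future cases no better than chance; 1.0 means every future case outranks every woman who stays cancer-free.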

Radiomics, which extracts mineable quantitative data from medical images for decision support, was central to a study by researchers from the University of Chicago, the University of California San Francisco (UCSF), and the H. Lee Moffitt Cancer Center and Research Institute. The study investigated combining mammography radiomics with a novel quantitative breast imaging technique based on dual-energy mammography to reduce false-positive biopsies. This technique, called three-compartment breast (3CB) imaging, was developed at UCSF by a team led by John Shepherd, PhD (now a professor at the University of Hawaiʻi Cancer Center). It acquires a standard diagnostic mammogram plus an additional high-energy mammographic image, with a small in-image phantom, to derive the water, lipid, and protein composition of tissue throughout the entire imaged breast. These tissue composition maps can be visualized as images and provide biological water-lipid-protein signatures of suspicious findings as well as of normal breast parenchyma.
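The article does not spell out the 3CB equations. As a rough sketch of the general idea only, three-material decomposition can be framed as three linear equations per pixel. The first two are the log-attenuations at the low and high energies, each a weighted sum of the water, lipid, and protein thicknesses. The third is the constraint that the three thicknesses add up to the total breast thickness. The attenuation coefficients below are hypothetical placeholders, and the total thickness is assumed to be known. This is not the UCSF implementation.

```python
# HYPOTHETICAL linear attenuation coefficients (1/cm) for (water, lipid, protein).
# Real values depend on the x-ray spectra and would come from calibration.
MU_LOW = (0.80, 0.50, 1.10)
MU_HIGH = (0.30, 0.25, 0.50)


def det3(m):
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(a, b):
    """Solve a @ x = b for a 3x3 system using Cramer's rule."""
    d = det3(a)
    if abs(d) < 1e-12:
        raise ValueError("singular system: materials cannot be separated")
    x = []
    for col in range(3):
        m = [row[:] for row in a]
        for r in range(3):
            m[r][col] = b[r]
        x.append(det3(m) / d)
    return x


def forward(thicknesses):
    """Log-attenuation ln(I0/I) at low and high energy for thicknesses in cm."""
    low = sum(mu * t for mu, t in zip(MU_LOW, thicknesses))
    high = sum(mu * t for mu, t in zip(MU_HIGH, thicknesses))
    return low, high


def decompose(log_att_low, log_att_high, total_thickness):
    """Return the water, lipid, and protein fractions for one pixel."""
    a = [list(MU_LOW), list(MU_HIGH), [1.0, 1.0, 1.0]]
    b = [log_att_low, log_att_high, total_thickness]
    t_water, t_lipid, t_protein = solve3(a, b)
    return {
        "water": t_water / total_thickness,
        "lipid": t_lipid / total_thickness,
        "protein": t_protein / total_thickness,
    }


# Round trip: 5 cm of tissue that is 30% water, 50% lipid, 20% protein
low, high = forward((1.5, 2.5, 1.0))
print(decompose(low, high, 5.0))
```

The round trip recovers the fractions only because the toy data are noise-free. With real measurements, the two energy equations are noisy and nearly collinear, which is one reason careful calibration matters.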

Karen Drukker, PhD, research associate professor at the University of Chicago, led the study that examined mammograms and 3CB images from 109 women with suspicious breast masses that were all biopsied. Of these 109 suspicious masses, only 35 were cancerous and the remaining 74 represented ‘unnecessary’ benign breast biopsies. Their mammograms were analyzed with a radiomics method developed by Maryellen L. Giger, PhD, and Dr. Drukker’s team, and the water-lipid-protein signatures were obtained from the corresponding 3CB images. Dr. Giger is a co-founder of Quantitative Insights, now Qlarity Imaging (Chicago, IL), which incorporated a similar radiomics technology for MRI into QuantX, the industry’s first FDA-cleared computer-aided diagnosis platform incorporating machine learning for the evaluation of breast abnormalities in breast MRI studies.
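The study's radiomics pipeline is not detailed here. As a hedged illustration of what "mineable quantitative data" can look like, the sketch computes a few generic, widely used radiomic features for a region of interest (ROI). These are first-order intensity statistics plus the contrast of a gray-level co-occurrence matrix (GLCM). The feature choice and the single horizontal neighbor offset are assumptions made for brevity. A real pipeline computes many more features and feeds them to a trained classifier.

```python
import math
from collections import Counter


def first_order_features(roi):
    """Mean, standard deviation, and Shannon entropy (bits) of pixel values in a 2D ROI."""
    pixels = [p for row in roi for p in row]
    n = len(pixels)
    mean = sum(pixels) / n
    std = math.sqrt(sum((p - mean) ** 2 for p in pixels) / n)
    entropy = sum(-(c / n) * math.log2(c / n) for c in Counter(pixels).values())
    return {"mean": mean, "std": std, "entropy": entropy}


def glcm_contrast(roi, levels):
    """GLCM contrast for horizontally adjacent pixel pairs.

    roi holds integer gray levels in range(levels). Contrast is the average
    squared gray-level difference between neighbors: 0 for a flat region,
    larger for busier, more heterogeneous texture.
    """
    glcm = [[0] * levels for _ in range(levels)]
    pairs = 0
    for row in roi:
        for left, right in zip(row, row[1:]):
            glcm[left][right] += 1
            pairs += 1
    return sum(glcm[i][j] * (i - j) ** 2
               for i in range(levels) for j in range(levels)) / pairs


smooth = [[2, 2, 2], [2, 2, 2], [2, 2, 2]]
mottled = [[0, 3, 0], [3, 0, 3], [0, 3, 0]]
for name, roi in (("smooth", smooth), ("mottled", mottled)):
    print(name, first_order_features(roi), glcm_contrast(roi, levels=4))
```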

Drukker et al found that combining mammography radiomics with 3CB image analysis would have raised the positive predictive value of biopsy from 32 percent (35 of the 109 breast masses) with visual interpretation alone to nearly 50 percent. This would have avoided 39 ‘unnecessary’ benign biopsies, a 36 percent overall reduction in biopsies. The technique had a sensitivity of 97 percent, missing one of the 35 cancers.2
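The percentages above follow directly from the reported counts. A short check is shown below. It assumes that the combined method's biopsy list consists of the 34 detected cancers plus the benign masses it did not screen out (74 minus 39).

```python
def biopsy_metrics(n_cancer, n_benign, benign_avoided, cancers_missed):
    """Summary metrics for a biopsy-triage rule applied to a set of biopsied masses."""
    n_total = n_cancer + n_benign
    cancers_caught = n_cancer - cancers_missed
    benign_still_biopsied = n_benign - benign_avoided
    return {
        "ppv_visual": n_cancer / n_total,
        "sensitivity": cancers_caught / n_cancer,
        "benign_avoided_share": benign_avoided / n_total,
        # Assumption: combined biopsy list = detected cancers + benign masses still flagged
        "ppv_combined": cancers_caught / (cancers_caught + benign_still_biopsied),
    }


metrics = biopsy_metrics(n_cancer=35, n_benign=74, benign_avoided=39, cancers_missed=1)
for name, value in metrics.items():
    print(f"{name}: {value:.1%}")
# ppv_visual: 32.1%
# sensitivity: 97.1%
# benign_avoided_share: 35.8%
# ppv_combined: 49.3%
```

Rounded, these match the reported 32 percent, 97 percent, 36 percent, and "nearly 50 percent."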

“Most abnormal findings on screening and diagnostic mammograms are benign,” Dr. Drukker explains. “This is an imaging technique that also looks at the biology of the lesion to help facilitate better decisions by the clinicians. By combining 3CB image analysis with mammography radiomics, the potential reduction in recall was substantial.”

The approach and the 3CB technique remain experimental, and Dr. Drukker and her colleagues plan to study the technique on digital breast tomosynthesis (DBT) images in the near future. She is also interested in applying it to other breast findings that may represent cancer, such as microcalcifications and architectural distortions.

If commercialized, the 3CB technique could be used with any full-field digital mammography system and would require no new equipment, Dr. Drukker says. It would need only minor modifications, such as a mechanism that pulls the small in-image phantom into place. Although the study focused on diagnostic mammograms, the technique could potentially be used for screening mammograms after further investigation.

Emerging medical imaging artificial intelligence (AI) and machine learning (ML) technologies, and the results of studies published in peer-reviewed journals, appear promising. However, many still need to be validated on sufficiently large, independent external datasets (such as those provided in the Cancer Imaging Archive, https://www.cancerimagingarchive.net/), Dr. Drukker adds. Moreover, promising stand-alone performance is a necessary first step for any computerized analysis method, but reader studies are needed to assess the method's impact on radiologists' performance.

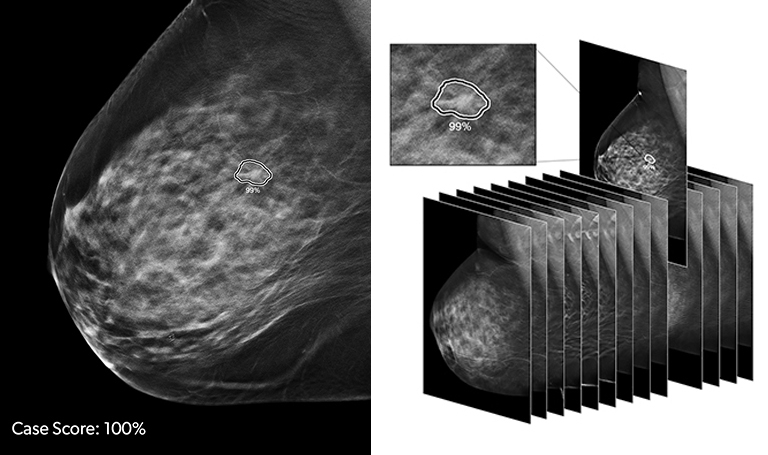

Deep learning cancer detection software for DBT

ProFound AI (iCAD, Inc., Nashua, NH) received FDA clearance in December 2018, based on a clinical study finding that the technology improved the accuracy and efficiency of digital breast tomosynthesis (DBT) screening. ProFound AI is a deep learning software system for DBT that is trained to enhance cancer detection by identifying malignant soft-tissue densities and calcifications. During reading, it helps radiologists score the likelihood that a lesion is malignant. According to Stacey Stevens, president of iCAD, Inc., the algorithm was trained on a dataset of more than 12,000 cases and 4,000 biopsy-proven cancers.

The study was led by Emily Conant, MD, professor and chief of breast imaging in the Department of Radiology at the Perelman School of Medicine at the University of Pennsylvania in Philadelphia. In that study, the use of ProFound AI increased readers' sensitivity by 8 percent and lowered the recall rate by 7 percent. Equally important, the solution cut reading time in half when used concurrently during DBT reading.

“Improving performance is always important for our patients, especially if we can find cancers earlier at a more treatable stage due to improvements in sensitivity, or if we can more confidently recall fewer women who don’t have cancer due to increases in specificity,” Dr. Conant says.

The combination of improved performance and decreased reading times may benefit both radiologists and patients, adds Dr. Conant.

“The improvement in reading time mostly helps with efficiency but could conceivably help patients by allowing radiologists to spend more time on more complex cases. However, the fact that we could, in general, reduce reading times and improve performance is a double win,” she says.

Studies examining ProFound AI are ongoing, says Stevens, and the deep learning technology used in the solution allows for continuous improvement. “Even though we launched the product, we continue to train it on additional datasets and expect to see even further improvements in performance in future releases,” she says.

Prior CAD solutions analyzed 2D data sets and relied on conventional machine learning algorithms. Deep learning algorithms, by contrast, learn progressively more abstract representations of an image as data pass through each layer of a neural network. In general, the more training data the algorithms have, the more they learn.
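To make the layered idea concrete, here is a toy forward pass through a small multilayer neural network in plain Python. The weights are arbitrary placeholders and do not come from any product. In a real deep learning model they are learned from training data, and imaging models typically apply convolutional layers to full-resolution images rather than to three input values. Each layer re-represents the output of the layer before it, which is what lets deeper layers capture more abstract features.

```python
import math


def dense(inputs, weights, biases):
    """Fully connected layer: each output is a weighted sum of all inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, biases)]


def relu(values):
    """Nonlinearity applied between layers: negative activations become zero."""
    return [max(0.0, v) for v in values]


def sigmoid(v):
    """Squash a score into a 0-1 value, read here as a probability of malignancy."""
    return 1.0 / (1.0 + math.exp(-v))


# HYPOTHETICAL weights; a trained model would learn these from labeled images
LAYERS = [
    ([[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]], [0.0, 0.1]),  # layer 1: 3 inputs -> 2 features
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),             # layer 2: 2 features -> 2 features
]
OUTPUT_WEIGHTS, OUTPUT_BIAS = [0.7, -0.4], 0.05


def forward(pixels):
    """Pass the input through every hidden layer, then map the final features to one score."""
    activations = pixels
    for weights, biases in LAYERS:
        activations = relu(dense(activations, weights, biases))
    score = sum(w * a for w, a in zip(OUTPUT_WEIGHTS, activations)) + OUTPUT_BIAS
    return sigmoid(score)


print(forward([1.0, 0.0, 0.0]))
print(forward([0.0, 0.0, 1.0]))
```

Training, typically by backpropagation, would adjust the numbers in `LAYERS` so that the outputs match known diagnoses. That is the step at which more data helps.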

The real value of the tool, Stevens adds, is that the solution is always on, evaluating each slice in the 3D DBT dataset and calling the radiologist’s attention to suspicious areas as the images are being read.

“A radiologist has to look at hundreds of image slices of the breast, and that can be tedious and time consuming,” says Stevens. “Cancer can be hiding in any of those slices. ProFound AI is a tool that backs them up and gives them the extra confidence.”

Like any new technology, tools infused with AI and ML need to be well researched to guide evidence-based decisions. ML can help in many areas of breast imaging, but Dr. Conant says it is best to take a stepwise approach: first determine how it helps patients, and then how it may help radiologists.

At Penn, Dr. Conant is helping to develop deep learning algorithms to better characterize breast density and to address the complexity in risk assessment and detection. Algorithms are being developed to flag cases with a low likelihood of malignancy to help prioritize reading lists by case severity or identify patients who may benefit from supplemental screening. An AI- or ML-based program may better identify these patient subgroups because it is more consistent and reproducible than a qualitative, visual evaluation.

“We are looking to create measurements that are more robust and reproducible than subjective assessments made by the radiologist such as BI-RADS density assignments,” Dr. Conant says.
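The reproducibility of a categorical assessment such as BI-RADS density is often quantified with Cohen's kappa. This statistic measures agreement between two readers (or two reads) beyond what chance alone would produce. Below is a minimal sketch, using made-up density assignments for six hypothetical exams.

```python
from collections import Counter


def cohens_kappa(ratings_a, ratings_b):
    """Cohen's kappa for two raters assigning categorical labels to the same items."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("need two non-empty rating lists of equal length")
    n = len(ratings_a)
    observed = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n
    freq_a, freq_b = Counter(ratings_a), Counter(ratings_b)
    # Agreement expected if both raters labeled independently at their own base rates
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical category for every item
    return (observed - expected) / (1.0 - expected)


# Hypothetical BI-RADS density categories (a to d) assigned to the same six exams
reader_1 = ["a", "b", "b", "c", "d", "b"]
reader_2 = ["a", "b", "c", "c", "c", "b"]
print(round(cohens_kappa(reader_1, reader_2), 3))  # 0.538
```

A kappa of 1 indicates perfect agreement and 0 indicates chance-level agreement. An automated measurement run twice on the same image would be expected to score at or near 1.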

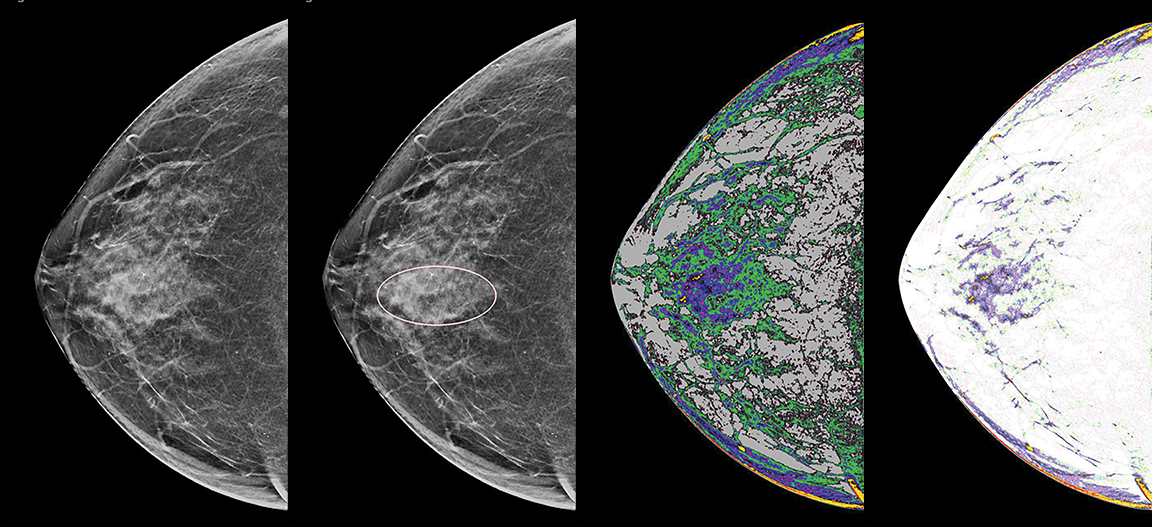

From bomb detection to cancer detection

Thomas Ramsay, CEO of Imago Systems (Lansdowne, VA), spent his career developing image analysis for a variety of applications, including explosives detection through analysis of X-ray images of carry-on bags at airports, fingerprint image processing for the FBI, the restoration of Thomas Edison’s movies for the Library of Congress, and more.

Ramsay’s interest in breast imaging began when his cousin’s daughters both died of metastatic breast cancer.

“I was really touched by that, and knew I needed to do something, so it doesn’t keep happening to others,” Ramsay says. “My brother, Gene, and I thought how can we look at this differently? We can’t go down the same path and so we looked at ways to reveal the geometry of tissue structures.”

The key, he says, was to write an algorithm that did not try to find something in an image but instead exposed the geometry and patterns within it. Ramsay compares the concept to developing a photograph in a darkroom, where the chemistry that exposes the silver particles in the paper reveals the image.

Biological systems don’t follow classic geometry; instead, they exhibit fractal patterns, never-ending patterns that repeat across different scales in an ongoing loop, says Ramsay, whose solution is based on fractal geometry.3
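Imago's algorithms are not described in enough detail to reproduce. As a generic illustration of how fractal structure is commonly quantified, the sketch below estimates a box-counting dimension. The method covers a set of pixels with boxes of shrinking size s, counts the occupied boxes N(s), and takes the slope of log N(s) against log(1/s). The grid size and box sizes are arbitrary choices for the demonstration.

```python
import math


def box_count(points, size):
    """Number of size x size grid boxes that contain at least one (x, y) pixel."""
    return len({(x // size, y // size) for x, y in points})


def box_counting_dimension(points, sizes):
    """Least-squares slope of log N(s) versus log(1/s)."""
    xs = [math.log(1.0 / s) for s in sizes]
    ys = [math.log(box_count(points, s)) for s in sizes]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    num = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    den = sum((x - mean_x) ** 2 for x in xs)
    return num / den


N = 64
SIZES = [1, 2, 4, 8, 16]
square = [(x, y) for x in range(N) for y in range(N)]
line = [(x, 0) for x in range(N)]
# Sierpinski triangle on a 64 x 64 grid: pixels whose x and y share no set bits
sierpinski = [(x, y) for x in range(N) for y in range(N) if x & y == 0]

for name, pts in (("square", square), ("line", line), ("sierpinski", sierpinski)):
    print(f"{name}: {box_counting_dimension(pts, SIZES):.3f}")
```

This prints 2.000 for the filled square and 1.000 for the line. For the self-similar Sierpinski pattern it prints the non-integer 1.585 (log 3 / log 2), which is the signature of a fractal.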

“Cancer expresses differently from normal tissue,” he explains. “We are providing the radiologist with the ability to see patterns of normal and abnormal structures in the whole breast, in dense tissue, so they can see the unique patterns where they couldn’t see them before.”

The result is ICE Reveal, a patent-pending technology that provides a contour map of the breast tissue that enables the identification of areas with rapid change of density, reveals structural characteristics within and around a mass, displays dense concentric patterns within cancerous tissue and, through a color map, differentiates normal tissue, cancer margins, and the internal geometry of a cancerous mass.
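The article does not say how ICE Reveal builds its contour map. A generic way to produce the topographic-map effect it describes is to slice pixel intensity into equal-width bands, like elevation bands. The sketch then counts how many band boundaries separate each pixel from its neighbors, so that closely packed boundaries mark rapid changes in density. The band width and the toy image are assumptions for illustration only.

```python
def contour_bands(image, step):
    """Quantize each pixel into an iso-band of width `step` (like elevation bands on a map)."""
    return [[int(v // step) for v in row] for row in image]


def contour_crossings(image, step):
    """Per pixel, count contour levels crossed toward the right and lower neighbors.

    Large counts mean contour lines are packed closely together, i.e. the
    intensity (a stand-in for density) changes rapidly at that location.
    """
    bands = contour_bands(image, step)
    h, w = len(bands), len(bands[0])
    out = [[0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            if c + 1 < w:
                out[r][c] += abs(bands[r][c + 1] - bands[r][c])
            if r + 1 < h:
                out[r][c] += abs(bands[r + 1][c] - bands[r][c])
    return out


# Toy image: a gentle gradient on the left and a sharp jump on the right
image = [
    [10, 11, 12, 40, 80],
    [10, 11, 13, 45, 85],
    [10, 12, 13, 42, 82],
]
for row in contour_crossings(image, step=5):
    print(row)
```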

Within the solution are three core algorithms. The “Relief” algorithm provides a 3D map of the biological structures in a mammographic image to reveal even the tiniest geometries. Ramsay says it can show the presence of a mass that is masked by calcifications. It can even show a mass developing at an early stage, when only the calcifications are visible to the human eye. The “Canyon” algorithm can depict the margins of microlobulations within dense tissue, which often look “foggy” on a mammogram. And the “Textures” algorithm reveals the micropatterns that each tissue type expresses in response to the algorithm. These patterns allow a clinician to differentiate abnormal from normal tissue, even in heterogeneously dense mammograms.

Imago Systems recently announced a multi-year agreement with the Mayo Clinic, which will support clinical trial development and oversee the company’s reader studies for mammography. The agreement includes a financial investment from Mayo Clinic, which is currently evaluating the solution under an Institutional Review Board (IRB) application.

Ramsay says he is completing a Phase 1 study with the National Cancer Institute that is examining whether his technology can reveal cancer progression in vivo, without biopsy or genomics, in research animals. In some cases, the subject is followed after administration of immunotherapy to determine any cancer regression, suggesting future potential in drug development.

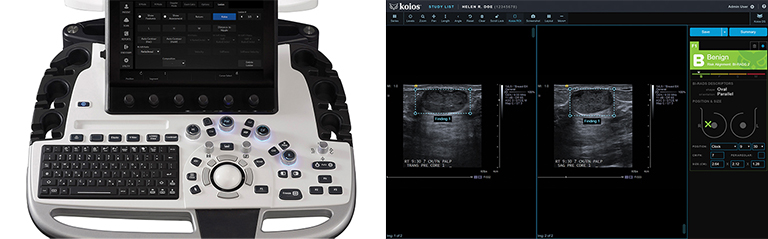

Artificial intelligence-infused decision support

Ultrasound is a valuable breast imaging tool, particularly in dense-breasted women; however, the imaging results can be impacted by operator variability. Koios Medical (Chicago, IL) recently received its second FDA clearance, this time for using Koios DS (Decision Support) Breast 2.0 at the point of care. The patented software comprises several algorithms that aid clinicians in diagnosing and classifying breast lesions in ultrasound images.

“The accurate interpretation and analysis of breast ultrasound is our initial focus,” says Chad McClennan, CEO, Koios Medical. “We are providing decision support on whether something is dangerous or benign. It’s a second opinion and consultation from an expert system to reduce that variability between readers.”

Koios DS Breast 2.0 has been trained on over 400,000 breast ultrasound studies of pathology-proven benign and malignant lesions. It can run directly on the ultrasound system, as with the GE LOGIQ E10, or within a PACS viewer, including systems from Fujifilm Medical, GE Centricity, Sectra, Visage Imaging, and others. Findings can be saved and exported to reporting systems to enhance reading efficiency and ease retrieval for follow-up. According to McClennan, the company offers a vendor-agnostic, viewer-based solution that can be downloaded and configured either by Koios or by radiology IT administrators, and that is also available directly on select scanner hardware. The ultimate goal is a plug-and-play PACS viewer version that could also be available in the exam room, although McClennan acknowledges that putting it directly on the hardware requires extensive technical development.

The Koios DS solution is offered as a subscription-based model; McClennan says that’s important for continuously improving and regularly pushing out enhancements, primarily those related to workflow. In 12 months, the company issued four different feature upgrades based on user feedback.

“If AI can help in medical imaging, we believe it can help the most in ultrasound, where there is a lower resolution and greater variability across users,” McClennan adds.

Although the company is focused on image interpretation, McClennan sees an opportunity for the technology in cancer detection as well as in thyroid and other ultrasound imaging applications.

From breast density to analytics

Volpara Solutions (Rochester, NY) is well known for its automated breast density assessment and enterprise quality and workflow monitoring solutions. This summer, the company moved into reporting systems with its acquisition of MRS Systems and into breast cancer detection with a distribution agreement for Screenpoint Medical’s Transpara products. Transpara received FDA clearance in November 2018 for reading screening 2D mammograms.

“MRS has an end-to-end data solution that, when combined with the VolparaEnterprise software, will help personalize the patient journey through screening, diagnosis and treatment,” says Mark Koeniguer, Volpara’s chief commercial officer and president of US operations. “Screenpoint adds AI and clinical decision support tools which can further improve productivity, detection, lesion management and workflow.”

The goal, Koeniguer says, is to leverage all the data possible in mammography with the use of AI and decision support to help approach the detection rates of breast MRI. Volpara is also working to improve reading workflow by bringing together the different data provided by each solution—from breast density to AI-augmented image analysis—so that the cases flagged as high risk appear first on a radiologist’s worklist. That is something Koeniguer says resonates with Volpara’s customers.
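The article does not describe how Volpara combines these inputs. The sketch below shows one way a worklist could be ordered so that flagged cases appear first. The `Study` fields, the 0-1 AI score, the high-risk flag, and the tie-breaking order are all hypothetical assumptions.

```python
from dataclasses import dataclass

DENSITY_RANK = {"a": 0, "b": 1, "c": 2, "d": 3}  # BI-RADS density, least to most dense


@dataclass
class Study:
    accession: str
    ai_score: float   # HYPOTHETICAL 0-1 suspicion score from an AI detection tool
    density: str      # BI-RADS density category "a" to "d"
    high_risk: bool   # HYPOTHETICAL flag from a risk model


def prioritize(studies):
    """Return a new worklist: high-risk first, then higher AI score, then denser breasts."""
    return sorted(
        studies,
        key=lambda s: (not s.high_risk, -s.ai_score, -DENSITY_RANK[s.density]),
    )


worklist = [
    Study("A", 0.2, "a", False),
    Study("B", 0.9, "b", False),
    Study("C", 0.4, "d", True),
    Study("D", 0.9, "c", False),
]
print([s.accession for s in prioritize(worklist)])  # ['C', 'D', 'B', 'A']
```

A sort key built from a tuple keeps the rule easy to audit. Each element is a tiebreaker for the element before it, so changing priorities means reordering the tuple.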

His vision is for the company to provide an AI-powered cancer screening platform that helps improve clinical productivity and enables more confident reads.

“We are focused on providing the right tools so the radiologist can predict which women, based on physiological and health history, may be at higher risk, so they use the right imaging tests and AI tools to ensure maximum cancer detection rates,” he says.

References

- Yala A, Lehman C, Schuster T, Portnoi T, Barzilay R. A Deep Learning Mammography-based Model for Improved Breast Cancer Risk Prediction. Radiology. 2019 Jul;292(1):60-66.

- Drukker K, Giger ML, Joe BN, et al. Combined Benefit of Quantitative Three-Compartment Breast Image Analysis and Mammography Radiomics in the Classification of Breast Masses in a Clinical Data Set. Radiology. 2019 Mar;290(3):621-628.

- Fractal Foundation. What are fractals? Available at: https://fractalfoundation.org/resources/what-are-fractals/. Accessed on September 4, 2019.

Citation

MB M. The integration of artificially intelligent technologies with breast imaging. Appl Radiol. 2019;(5):32-36.

September 25, 2019