Image visualization: Selecting a vendor and coping with multidetector and multislice data sets

Dr. Boonn is Chief, 3D and Advanced Imaging, Hospital of the University of Pennsylvania, Philadelphia, PA.

There are many factors to consider when selecting a vendor for advanced visualization software. In this talk I hope to outline the major purchasing points that should be considered and I will present strategies for coping with multidetector and multislice data sets.

First, these solutions have undergone a significant maturation, and at this year’s 11th Annual International Symposium on Multidetector Computed Tomography (Stanford MDCT) conference in May, most vendors did a good job at completing all of the tasks in the Workstation Face-Off. The Workstation Face-Off is an annual event where vendors compare how each major workstation can be used to view and analyze volumetric MDCT data. In previous years there were significant differences in performance and not all solutions were able to complete the required tasks in the allotted time.

While a range of solutions exists for reconstructing CT data, not all are created equal, and this talk will detail how to select a vendor based on:

- architecture,

- applications,

- licensing,

- compatibility,

- workflow,

- enterprise distribution, and

- reporting.

Architecture

Much has been published on whether thick- or thin-client solutions are better. Due to recent technological advances, the majority of practices will be best served by a solution with thin-client architecture. These offerings are more scalable than their thick-client counterparts. But how thin is your thin client? Some thin clients require users to download and install cumbersome software. If the intended solution is Web-based, find out its minimum bandwidth requirements. This information will help you determine how far you can distribute the system, e.g., whether you will be able to use it to read from home or to read while connected over a mobile network. Regarding long-term cost, it will be helpful to know whether you can perform incremental upgrades to the server as more users are added or whether you will need to essentially double the server capacity to add more users. Finally, it helps to know what type of licensing model the vendor employs.

With that said, thick clients are not dead. They fill an important role in niche markets. They are well-suited to small practices where only a single radiologist or a single 3-dimensional (3D) technologist will be doing reformats. Those practices do not need to deploy and maintain the large server that a thin-client system requires. Certain applications, like magnetic resonance imaging (MRI) spectroscopy, can only be performed on thick-client workstations. If your practice has a dedicated 3D lab, the technologists there could benefit from the performance of thick-client workstations rather than relying on a shared thin-client resource.

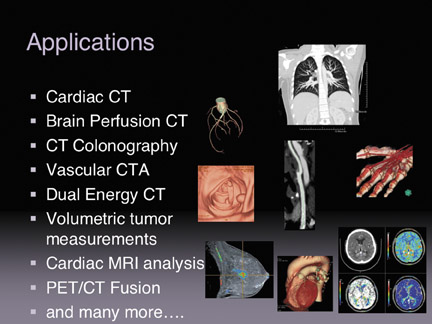

Applications

The list of advanced visualization applications (Figure 1) continues to grow. The important point here is to buy only what you will be using on a daily basis. You want to avoid what I term the “shopaholic syndrome,” and avoid applications that you will not need on day one. Take CT colonography, for instance: if your practice does not have a radiologist who is qualified to read CT colonography, or if you have no referring client base for this type of imaging, you should avoid purchasing it. Applications can always be added later when your practice establishes the need and/or ability to perform a certain type of imaging. Waiting to make that type of purchase means that you can likely acquire a later revision of the software, potentially with more functionality and at a lower price point.

Licensing

Licensing schemes vary tremendously. In the old days, vendors sold machine licenses, which only allowed specific users on specific workstations. This led to workflow inefficiencies. You can see the remnants of this trend in many reading rooms today: look for the dust-covered workstation that no one wants to read on because they have to get up from their regular image review workstation. The best licensing models are either hardware-limited concurrent user licenses or unlimited licenses/users up to a maximum hardware limit. The latter eliminates any user limits and allows practices to take full advantage of the hardware they have purchased.
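
As a rough illustration of why a concurrent model avoids the dust-covered workstation, the sketch below caps the number of simultaneous sessions without caring which user is logged in or where. The class and method names are hypothetical and do not reflect any vendor's licensing system.

```python
class ConcurrentLicensePool:
    """Hypothetical concurrent-license pool.

    Caps the number of simultaneous sessions; it does not tie a seat
    to a particular user or a particular workstation.
    """

    def __init__(self, max_sessions: int) -> None:
        self.max_sessions = max_sessions
        self.active = set()  # (user, workstation) pairs currently holding a seat

    def open_session(self, user: str, workstation: str) -> bool:
        """Grant a seat if one is free; return False when the pool is full."""
        key = (user, workstation)
        if key in self.active:
            return True  # this session already holds a seat
        if len(self.active) >= self.max_sessions:
            return False
        self.active.add(key)
        return True

    def close_session(self, user: str, workstation: str) -> None:
        """Release the seat so that any other user can take it."""
        self.active.discard((user, workstation))
```

With a machine license, by contrast, the check would be against a fixed list of workstations, which is what forces a radiologist to get up and move.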

Compatibility

In a perfect world, all data would work with all software. Unfortunately, this is not the case. We have scanners in our practice that produce data that cannot be accessed on other vendors’ software because of information stored in proprietary tags. Anyone who is attending a product demo should bring their own data sets to ensure compatibility. I regularly bring a DVD loaded with our typical data sets when evaluating software. These data are acquired with normal scan parameters. I also bring some of our more challenging data sets: those that are suboptimal due to artifacts, noise or motion. Almost any system can do a good job extracting a coronary artery from a perfect case. However, I like to test how the software deals with challenging cases and see how easy it is to manually correct errors.

Workflow

Advanced visualization products generally integrate well with PACS. But some advanced visualization solutions may require extensive customization to operate with PACS and it is beneficial to determine the cost up front. Plan a site visit at a facility that has the exact same PACS and RIS as you have. Many advanced visualization vendors promise some level of integration but it can be challenging, so it is important to see it in action prior to making a purchase.

You should also determine who will be doing most of the 3D work at your facility. Will it be a team of 3D technologists, modality technologists or radiologists? Will you deliver 3D using a collaborative or a consumption-based approach? In a collaborative approach, the 3D technologist prepares a case, for example doing bone removal and vessel extraction, and then the radiologist, working directly in the 3D environment, makes a diagnosis and has the ability to fully manipulate the data set in order to reach that diagnosis. A consumption-based approach sees the technologists creating static 3D models and saving them directly to PACS for the radiologists to review.

Enterprise distribution

Advanced visualization is not just for the radiologist anymore. Enterprise distribution is becoming increasingly necessary: many neurosurgeons and orthopedic surgeons are no longer satisfied with viewing static models created for them. They want to manipulate and rotate the images themselves to help in surgical planning. Thin-client systems are essential for this type of distribution and a Web-based client is even better. If you can find a solution that does not force the user to download and install client software, you can also dramatically reduce tech support calls. In general, a balance needs to be struck when providing these services to the enterprise. Some users will want the full-featured functionality that the radiologists use. However, delivering such a complex system to all users could result in increased support and training costs.

Reporting

In this supplement Dr. Rubin will address the increasing push for quantitative imaging results in radiology. We are routinely providing quantitative results in our reports when we perform volumetric measurements of lung nodules and when we provide detailed measurements in aortic stent graft planning. Vendors can let you create beautifully annotated reports. You can export them in a Microsoft Word or a PDF format, but for me that is useless since I need to dictate that report into a speech recognition system or a standard, transcription-based dictation system.

For example, if I am comparing a prior and current examination of lung nodules, I can use a single-click volume extraction tool to generate measurements in about 5 seconds. The report, however, will take me much longer to dictate. Additionally, dictation introduces multiple potential sources of error, even though the numbers are already stored digitally in the 3D analysis software. Ideally I would be able to export them into a structured reporting template to reduce dictation time and errors.

As Dr. Rubin will discuss, there is an emerging Annotation and Image Markup (AIM) standard for measurement and annotation results. This standard has the potential to optimize reporting workflow in 3D, where radiologists could initially make measurements and have them stored in AIM format. Those documents could then be sent to a structured reporting tool to automatically create a report. They could also be sent to a database to facilitate future research and follow-up cases, so radiologists would not need to re-measure the nodule the next time the patient comes for a follow-up study.
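
The idea of passing stored measurements straight into a report can be sketched in a few lines. The record below is a hypothetical stand-in, not the AIM schema, and the sentence template is only an assumption about what a structured report line might look like.

```python
from dataclasses import dataclass


@dataclass
class NoduleMeasurement:
    """Hypothetical measurement record; the field names are illustrative only."""
    location: str
    prior_volume_mm3: float
    current_volume_mm3: float


def report_line(m: NoduleMeasurement) -> str:
    """Build one report sentence directly from stored numbers, with no re-dictation."""
    change_pct = (m.current_volume_mm3 - m.prior_volume_mm3) / m.prior_volume_mm3 * 100
    return (
        f"{m.location} nodule: {m.current_volume_mm3:.0f} mm3, "
        f"previously {m.prior_volume_mm3:.0f} mm3 ({change_pct:+.0f}%)."
    )


if __name__ == "__main__":
    print(report_line(NoduleMeasurement("Right upper lobe", 100.0, 125.0)))
```

Because the numbers never pass through dictation, the transcription step that can introduce errors is removed, and the same record could also be written to a follow-up database.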

Coping with large data sets

One of the major issues with data sets is the growth in data set size. In the 1990s, a head CT was 25 slices. In the early 2000s, a trauma CT was around 1200 slices. Today, a multiphase CT study could easily be 6800 slices or more. We have significant data overload.
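
To put these slice counts in perspective, a back-of-the-envelope estimate is sketched below. It assumes an uncompressed 512 × 512 matrix stored at 2 bytes per pixel, which is typical for CT but is not a figure from this talk. Header overhead and compression are ignored.

```python
# Rough uncompressed size of a CT study from its slice count.
# Assumptions (not from the talk): 512 x 512 matrix, 2 bytes per pixel,
# no compression, DICOM header overhead ignored.
ROWS = 512
COLS = 512
BYTES_PER_PIXEL = 2


def study_size_mb(num_slices: int) -> float:
    """Return the approximate study size in megabytes (1 MB = 10**6 bytes)."""
    return num_slices * ROWS * COLS * BYTES_PER_PIXEL / 1e6


if __name__ == "__main__":
    examples = [
        ("1990s head CT", 25),
        ("early-2000s trauma CT", 1200),
        ("multiphase CT today", 6800),
    ]
    for label, slices in examples:
        print(f"{label}: {slices} slices, about {study_size_mb(slices):,.0f} MB")
```

Under these assumptions the three examples come to roughly 13 MB, 630 MB and 3.6 GB, respectively.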

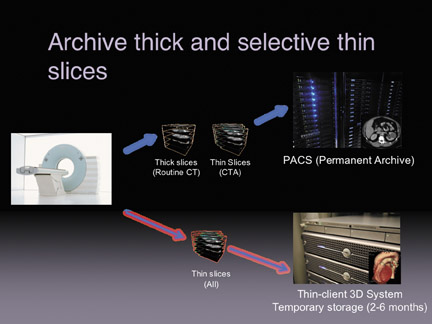

There are several strategies to store thin-slice data sets (Figure 2). The first strategy is to archive only the thick slices and send the thin slices to a thin-client temporary archive. Information on the temporary archive will eventually expire in about 2 to 6 months. The problem occurs if the patient comes back a year later, at which time there will be no ability to do volume measurements on the original study. An intermediary workaround calls for archiving thick slices and certain thin slices to PACS. So for routine CTs of the abdomen or pelvis, facilities that employ this strategy will generate both thick and thin slices and store them separately (thick to the PACS and thin to a temporary 3D archive). The difference is that CT angiography procedures would be stored to the PACS as thin-slice data, which would allow for long-term follow-up.

With storage becoming cheaper and with increasing use of 3D by radiologists, it is becoming more important to find strategies to archive both the thick and thin slices. Other facilities maintain a completely separate archive for thin-slice data, especially in cases where a legacy PACS with an older architecture cannot handle large data sets effectively.

A better option, with a more modern PACS, is to archive both the thick- and thin-slice data in a central archive.
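
The archiving strategies described above amount to a routing rule applied to each reconstructed series. The sketch below is one hypothetical way to express three of them. The 1.5-mm cutoff between "thin" and "thick" and the destination names are assumptions, and a real deployment would configure this in the scanner or a DICOM router rather than in a script.

```python
from dataclasses import dataclass
from enum import Enum


class Strategy(Enum):
    THICK_ONLY = "thick to PACS, thin to temporary 3D archive"
    INTERMEDIATE = "as above, but CT angiography thin slices also go to PACS"
    CENTRAL = "thick and thin both go to the central archive"


@dataclass
class Series:
    slice_thickness_mm: float
    is_ct_angiography: bool = False


# Hypothetical cutoff between "thin" and "thick"; each site chooses its own.
THIN_MAX_MM = 1.5


def destination(series: Series, strategy: Strategy) -> str:
    """Return 'PACS' (long-term) or 'TEMP_3D' (expiring) for one series."""
    is_thin = series.slice_thickness_mm <= THIN_MAX_MM
    if not is_thin or strategy is Strategy.CENTRAL:
        return "PACS"
    if strategy is Strategy.INTERMEDIATE and series.is_ct_angiography:
        return "PACS"
    return "TEMP_3D"
```

For example, a 0.625-mm CT angiography series goes to the temporary archive under the first strategy but to PACS under the intermediate one, which is what preserves the option of long-term follow-up.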

Conclusion

The pinnacle, however, could be to archive all of the raw data and enable on-the-fly reconstruction at any point in the future. This would allow future users to select the slice thickness, the kernel, the field of view, the phase of the cardiac cycle, and many other parameters as if the scan had just been acquired. This would eliminate the need to prospectively choose the image parameters at the time of original reconstruction.

We are a few years away from this capability but it will enable more thorough evaluation and you will not have to worry about thick slices or thin slices; you will just have data to interpret. However, the raw data is several gigabytes (GB) in size. Looking at a multi-phase cardiac CT, where you have multiple phases and multiple kernels, the total data set size for thin-slice reconstruction can far exceed the size of the raw data.

Discussion

ELIOT L. SIEGEL, MD: Bill, that was an excellent overview and a great presentation. So when you say thin client, I’m not sure everybody knows exactly what you mean. And also give us a little more description about server-side rendering.

DR. BOONN: The most important factor in thin-client vs. thick-client processing is that with thin-client architecture, the data set and the processing, the manipulation, the calculations and the rendering of that data, occur remotely from the actual PC or system that you are using at that moment.

DR. SIEGEL: So your PC is opening up a window into another computer that’s actually doing the processing, and the graphical manipulation?

DR. BOONN: Correct. A thin client would be the equivalent of your Web browser. And if you use a Web-based e-mail system, for example, all of your e-mail is stored on a server, and when you open up a Web browser, you’re not downloading all of that e-mail onto your client PC. It’s a window into the system. All the processing occurs centrally. Some of the advantages of that type of system are that you really can optimize it for performance and rendering ability. You don’t have to transfer a particular data set to multiple locations. For example, if you have several reading rooms, where a radiologist may be doing 3D manipulations, if you had thick-client workstations in each of those rooms, you would actually have to send that data set to each of those workstations; whereas, with a thin-client architecture, you can send those data sets to a single, central, thin-client repository, where all of the manipulation can be done.

DR. SIEGEL: Is that more demanding of network resources? Do I have to upgrade my systems or infrastructure for that?

DR. BOONN: It depends. The individual server that you’re using is going to be a very powerful, probably fairly expensive server. But the clients that you’re using to access the data can be much less powerful. In terms of overall network utilization, by not needing to transfer large data sets to multiple different locations, you’re decreasing network utilization. However, the one disadvantage of a thin-client system is that you’re bound by your network. If you have major network problems, where you have frequent cut-outs or your network is fairly slow, then your performance may also be impacted. With a thick-client workstation set-up, once you transfer all of the images to that particular workstation, you would still be able to do your work even in the event of a complete network failure. So there are advantages and disadvantages to both types of systems.

DR. SIEGEL: Vikram, one of the things that Bill didn’t really delve into in a lot of detail is image quality. He talked about taking a CD or DVD of some of the images from his institution, and testing to see whether or not an advanced visualization system would have the capability of being able to display those images, but how about the quality of the images themselves? Is it a commodity now, or is there a difference from one vendor to another, as far as quality is concerned? How can potential customers tell the difference?

VIKRAM SIMHA: I think image quality is a big issue. I think there needs to be a standard to address that. Every vendor says they have great image quality, and we say we have great image quality too. But image quality, in many ways, is subjective. And I think there is some work to be done to create a standard to measure image quality across vendors.

KHAN M. SIDDIQUI, MD: When you talked about storing multiple paradigms of thick-slice and thin-slice data, isn’t the ideal solution to just do your thin-slice imaging and have the ability for the software to manipulate it later as necessary? The other question is how do you see 3D imaging going into the enterprise? Is it purely a specialty role, only for neurosurgeons or orthopedic surgeons? Or do you really see the general practitioners considering its use?

DR. BOONN: Sure. So your first issue, regarding thin vs. thick slices: I think we’re probably on the same page in that my preference would be to get rid of the thick-slice reconstructions altogether. However, there are a couple of limitations, one of which is behavioral. There are a number of radiologists who would still prefer to read their 5 mm or 8 mm thick slices, because there are fewer images to go through. Unfortunately, not all systems have a capability of adjusting the slice thickness on the fly.

MR. SIMHA: But is that more of a navigation paradigm, in which it’s cumbersome to navigate through large data sets and more of an educational issue to show radiologists that they can navigate through the sets faster? Or is it truly that they prefer thick slices because of the number of images? Do they just want thick slices because it is difficult to go through thin-slice data sets?

DR. BOONN: I’ve run into both issues. There is clearly a performance issue. And as PACS and as 3D visualization tools improve, I think that that barrier is decreasing. If you just go back a few years ago, if you tried to load a 1200-slice CT exam into, at that point, a relatively modern PACS, it would take you a very long time to scroll through the entire study. So I think improving the software and improving visualization tools will certainly reduce that barrier. And, hopefully, those improvements will alleviate the behavioral issues or habits of only wanting to look at a handful of slices when reviewing a study.

And getting to Khan’s second point, about who is the target audience of enterprise distribution of 3D: our experience has been that the people who have wanted to do the most detailed manipulations actually have been surgeons, oncologists, or other clinicians who are doing surgical or therapy planning. As this technology continues to improve, and also simplify, we may see a lower barrier and more utilization that will make it easier to distribute advanced visualization tools to the general practitioner.

I think a lot of our general practitioners really appreciate when we create a number of 3D images, so that they can use it to communicate their findings to their patients. And even if they’re not doing the manipulation on their own, the 3D images that we create can actually be useful in clinical practice. As we move to simplify some of the user interfaces and offer more Web-based solutions, I think that it would be great to be able to offer 3D capabilities to all of our referring clinicians.

DR. SIEGEL: Bob, do you see, in another 5 years, that the distinction between 3D or advanced visualization and PACS will become blurred or nonexistent? At that time, will this all just be seen as standard PACS functionality? Or do you think there’s going to be a role for some sort of third-party add-ons to PACS, to give us these really cool features?

BOB COOKE: You have to question the term “advanced visualization.” To a certain extent, a lot of the routine visualization that is done is ubiquitous across applications. So maximum intensity projection (MIP) and multiplanar reformatting (MPR) today are routine functions within the PACS. And increasingly, as the content of exams, especially the use of volumetric data sets, increases, the demand to move those tools inside of the normal interpretation platform will only continue to increase. By the same token, obviously we can expect that, in the future, new modalities will continue to push the envelope, in terms of what is possible to see inside of the body; and new software algorithms will continue to be incubated that will allow us to analyze those things. So there’s always going to be this dynamic where the common interpretation platform will absorb the functionality of the more “advanced systems.” But, in addition, there will be cutting edge software that’s going to continue to be developed and incubated, that allows us to analyze this data in a unique way. And then, eventually, as those algorithms prove themselves, they will force themselves into the diagnostic process.